Security News

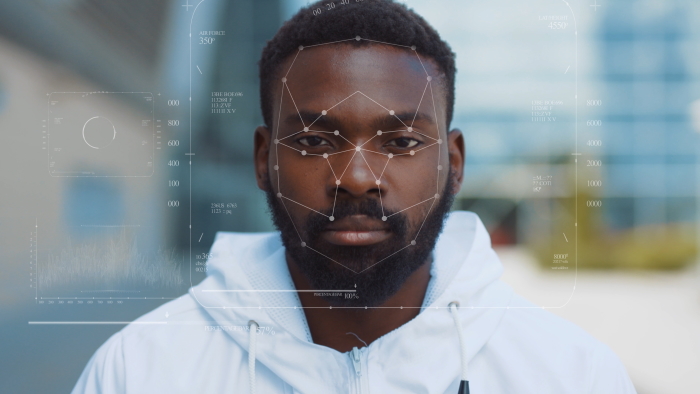

South Wales Police and the UK Home Office "fundamentally disagree" that automated facial recognition software is as intrusive as collecting fingerprints or DNA, a barrister for the force told the Court of Appeal yesterday. Jason Beer QC, representing South Wales Police, also blamed the Information Commissioner's Office for "dragging" the court into the topic of whether the police force's use of the creepy cameras complied with the Data Protection Act.

Lawmakers have proposed legislation that would indefinitely ban the use of facial recognition technology by law enforcement nationwide. While various cities have banned government use of the technology, the bill would be the first temporary ban on facial recognition technology ever enacted nationwide.

A top judge told a barrister for the UK Information Commissioner's Office today that his legal arguments against police facial-recognition technology face "a great difficulty" as he wondered whether they were even relevant to the case. In plain English, the barrister, Facenna, was saying that South Wales Police's legal justification for deploying facial-recognition tech, as detailed yesterday, didn't comply with the right to privacy guaranteed by the Human Rights Act, nor with the section of the Data Protection Act 2018 which states: "The processing of personal data for any of the law enforcement purposes is lawful only if and to the extent that it is based on law."

More than 1,000 technology experts and academics from organizations such as MIT, Microsoft, Harvard and Google have signed an open letter denouncing a forthcoming paper describing artificial intelligence algorithms that can predict crime based only on a person's face, calling it out for promoting racial bias and propagating a #TechtoPrisonPipeline. The paper describes an "automated computer facial recognition software capable of predicting whether someone is likely going to be a criminal," according to a press release about the research.

Tech giants love to portray themselves as forces for good, and as the United States was gripped by anti-racism protests, a number of them publicly disavowed selling controversial facial recognition technology to police forces. The technology has a dark side, with facial recognition integrated into China's massive public surveillance system and its social credit experiment, where even minor infractions of public norms can result in sanctions.

Microsoft is joining Amazon and IBM in halting the sale of facial recognition technology to police departments. "We will not sell facial recognition tech to police in the U.S. until there is a national law in place. We must pursue a national law to govern facial recognition grounded in the protection of human rights," Smith said during a virtual event hosted by the Washington Post.

While newer regulations like the EU's General Data Protection Regulation and the California Consumer Privacy Act are steps in the right direction to protect consumer privacy, there is a need for tighter regulation for facial recognition technology. Facial recognition vs. facial authentication.

The company now says its masked facial recognition program has reached 95 percent accuracy in lab tests, and even claims that it is more accurate in real life, where its cameras take multiple photos of a person if the first attempt to identify them fails. Counter-intuitively, training facial recognition algorithms to recognize masked faces involves throwing data away.

In the midst of the ongoing coronavirus pandemic, facial recognition technology is being adopted globally as a way to track the virus' spread. But privacy experts worry that, in the rush to implement COVID-19 tracking capabilities, important and deep-rooted issues around data collection and storage, user consent, and surveillance will be brushed under the rug. "While facial recognition technology provides a fast and zero-contact method for identifying individuals, the technology is not without risks. Primarily, individuals scanned by facial recognition services need to be aware of how their data is being used."
