Security News

![S3 Ep53: Apple Pay, giftcards, cybermonth, and ransomware busts [Podcast]](/static/build/img/news/s3-ep53-apple-pay-giftcards-cybermonth-and-ransomware-busts-podcast-small.jpg)

You can listen to us on Soundcloud, Apple Podcasts, Google Podcasts, Spotify, Stitcher and anywhere that good podcasts are found.

The Authority for Consumers and Markets (ACM) in the Netherlands is pressing Apple to lift App Store payment restrictions in the country. ACM hasn't published a relevant report on its portal yet, but Reuters claims that the antitrust authority warned Apple over a month ago to lift the in-app payment restrictions.

All third-party iOS, iPadOS, and macOS apps that allow users to create an account must also provide a method for deleting that account from within the app beginning next year, Apple said on Wednesday. "This requirement applies to all app submissions starting January 31, 2022," the iPhone maker said, urging developers to "review any laws that may require you to maintain certain types of data, and to make sure your app clearly explains what data your app collects, how it collects that data, all uses of that data, your data retention/deletion policies."

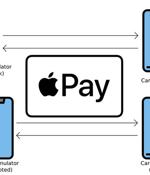

Cybersecurity researchers have disclosed an unpatched flaw in Apple Pay that attackers could abuse to make an unauthorized Visa payment with a locked iPhone by taking advantage of the Express Travel mode set up in the device's wallet. Express Travel is a feature that allows users of iPhone and Apple Watch to make quick contactless payments for public transit without having to wake or unlock the device, open an app, or even validate with Face ID, Touch ID or a passcode.

Apple's digital wallet Apple Pay will pay whatever amount is demanded of it, without authorization, if configured for transit mode with a Visa card, and exposed to a hostile contactless reader. Boffins at the University of Birmingham and the University of Surrey in England have managed to find a way to remove the contactless payment limit on iPhones with Apple Pay and Visa cards if "Express Transit" mode has been enabled.

Apparent flaw allows hackers to steal money from a locked iPhone, when a Visa card is set up with Apple Pay Express Transit. Express Transit makes Apple Pay and your iPhone work a bit like a regular credit card, which doesn't need unlocking with a PIN code for low-value transactions.

An attacker who steals a locked iPhone can use a stored Visa card to make contactless payments worth up to thousands of dollars without unlocking the phone, researchers are warning. The problem is due to unpatched vulnerabilities in both the Apple Pay and Visa systems, according to an academic team from the Universities of Birmingham and Surrey, backed by the U.K.'s National Cyber Security Centre.

Academic researchers have found a way to make fraudulent payments using Apple Pay from a locked iPhone when a Visa card in the digital wallet has express mode enabled. Express Transit is an Apple Pay feature that allows a transaction to go through without unlocking the device.

An unpatched stored cross-site scripting bug in Apple's AirTag "Lost Mode" could open up users to a cornucopia of web-based attacks, including credential-harvesting, click-jacking, malware delivery, token theft and more. If a tagged item is further afield, the AirTag sends out a secure Bluetooth signal that can be detected by nearby devices in Apple's Find My network.