Security News

Like shadow IT, shadow AI refers to all the AI-enabled products and platforms being used within your organization that your IT and security teams don't know about. Establishing a risk matrix for AI use within your organization, and defining how AI will be used, will allow you to have productive conversations about AI usage across the entire business.
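To make the risk-matrix idea concrete, here is a minimal Python sketch that assumes a common likelihood × impact scoring scheme on 1-5 scales. The `AITool` type, the tool names, their scores, and the high/medium/low cut-offs are all hypothetical; each organization would define its own scales and thresholds.

```python
from dataclasses import dataclass


@dataclass
class AITool:
    """One entry in a hypothetical inventory of AI tools in use."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) .. 5 (almost certain) chance of misuse or data leak
    impact: int      # assumed scale: 1 (negligible) .. 5 (severe) consequence if it happens


def risk_score(tool: AITool) -> int:
    """Return likelihood x impact, a value in 1..25."""
    for value in (tool.likelihood, tool.impact):
        if not 1 <= value <= 5:
            raise ValueError("likelihood and impact must be in 1..5")
    return tool.likelihood * tool.impact


def risk_level(score: int) -> str:
    """Map a score to a band; these cut-offs are illustrative assumptions."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


# Hypothetical inventory entries, for illustration only.
inventory = [
    AITool("hypothetical-chatbot (customer data pasted in)", 4, 5),
    AITool("hypothetical-code-assistant", 3, 3),
    AITool("hypothetical-grammar-checker", 2, 1),
]

# List tools from highest to lowest risk so the riskiest get discussed first.
for tool in sorted(inventory, key=risk_score, reverse=True):
    score = risk_score(tool)
    print(f"{tool.name}: score={score}, level={risk_level(score)}")
```

Ranking the inventory this way gives teams a shared starting point for deciding which AI tools to approve, restrict, or investigate further.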

When tech billionaires and corporations steer AI, we get AI that tends to reflect the interests of tech billionaires and corporations instead of the public. To benefit society as a whole, we need an AI public option (not to replace corporate AI, but to serve as a counterbalance) as well as stronger democratic institutions to govern all of AI. Like public roads and the federal postal system, a public AI option could guarantee universal access to this transformative technology and set an implicit standard that private services must surpass to compete.

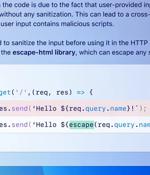

GitHub on Wednesday announced that it's making available a feature called code scanning autofix in public beta for all Advanced Security customers to provide targeted recommendations in an effort...

GitHub has introduced a new AI-powered feature designed to speed up vulnerability fixes during coding. Called Code Scanning Autofix and powered by GitHub Copilot and CodeQL, it covers more than 90% of alert types in JavaScript, TypeScript, Java, and Python.

Did you know that 79% of organizations are already leveraging Generative AI technologies? Much like the internet defined the 90s and the cloud revolutionized the 2010s, we are now in the era of...

To effectively safeguard these new environments, cybersecurity teams need to understand how red teaming changes in the context of AI. Understanding what AI has changed is an essential starting point for guiding red teaming efforts in the years ahead. Why does AI flip the red teaming script? Because the abilities of these models increase over time, cyber teams are no longer red teaming a static model.

NVIDIA's newest GPU platform is Blackwell, which companies including AWS, Microsoft and Google plan to adopt for generative AI and other modern computing tasks, NVIDIA CEO Jensen Huang announced during his keynote at the NVIDIA GTC conference on March 18 in San Jose, California. Alongside the Blackwell GPUs, the company announced the NVIDIA GB200 Grace Blackwell Superchip, which links two NVIDIA B200 Tensor Core GPUs to the NVIDIA Grace CPU, providing a new combined platform for LLM inference.

Large language models (LLMs) powering artificial intelligence (AI) tools today could be exploited to develop self-augmenting malware capable of bypassing YARA rules. "Generative AI can be used to...

There is a lot we can learn about social media's unregulated evolution over the past decade that directly applies to AI companies and technologies. These lessons can help us avoid making the same mistakes with AI that we did with social media.

The U.S. Securities and Exchange Commission announced today that two investment advisers, Delphia and Global Predictions, have settled charges of making misleading statements about their use of artificial intelligence in their products. The two firms have agreed to pay a combined $400,000 in civil penalties for their "AI washing" activities: Delphia will pay $225,000 and Global Predictions will pay $175,000.