Security News

AI platform Hugging Face says that its Spaces platform was breached, allowing hackers to access authentication secrets for its members. Hugging Face Spaces is a repository of AI apps created and submitted by the community's users, allowing other members to demo them.

Artificial Intelligence (AI) company Hugging Face on Friday disclosed that it detected unauthorized access to its Spaces platform earlier this week. "We have suspicions that a subset of Spaces’...

We don't know how far AI will go in replicating or replacing human cognitive functions. Again, I am less interested in how AI will substitute for humans.

OpenAI on Thursday disclosed that it took steps to cut off five covert influence operations (IO) originating from China, Iran, Israel, and Russia that sought to abuse its artificial intelligence...

The program comes shortly after several recent announcements by NIST around the 180-day mark of the Executive Order on trustworthy AI and the U.S. AI Safety Institute's unveiling of its strategic vision and international safety network. "With the ARIA program, and other efforts to support Commerce's responsibilities under President Biden's Executive Order on AI, NIST and the U.S. AI Safety Institute are pulling every lever when it comes to mitigating the risks and maximizing the benefits of AI," Raimondo continued.

Chronon is an open-source, end-to-end feature platform designed for machine learning teams to build, deploy, manage, and monitor data pipelines for machine learning. Chronon enables you to harness all the data within your organization, including batch tables, event streams, and services, to drive your AI/ML projects without the need to manage the typically required orchestration.

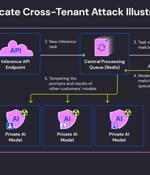

Cybersecurity researchers have discovered a critical security flaw in the artificial intelligence (AI)-as-a-service provider Replicate that could have allowed threat actors to gain access to...

One of the key aims was to make progress towards a global set of AI safety standards and regulations. U.K. Technology Secretary Michelle Donelan said in a closing statement, "The agreements we have reached in Seoul mark the beginning of Phase Two of our AI Safety agenda, in which the world takes concrete steps to become more resilient to the risks of AI and begins a deepening of our understanding of the science that will underpin a shared approach to AI safety in the future."