New ‘Rules File Backdoor’ Attack Lets Hackers Inject Malicious Code via AI Code Editors

2025-03-18 15:43

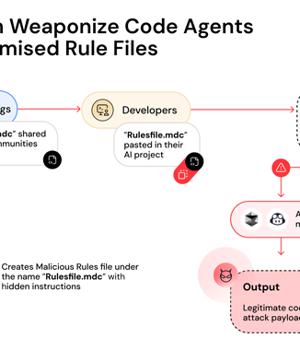

Cybersecurity researchers have disclosed details of a new supply chain attack vector dubbed Rules File Backdoor that affects artificial intelligence (AI)-powered code editors like GitHub Copilot and Cursor, causing them to inject malicious code. "This technique enables hackers to silently compromise AI-generated code by injecting hidden malicious instructions into seemingly innocent

News URL

https://thehackernews.com/2025/03/new-rules-file-backdoor-attack-lets.html
