Security News > 2023 > September > Microsoft AI Researchers Accidentally Expose 38 Terabytes of Confidential Data

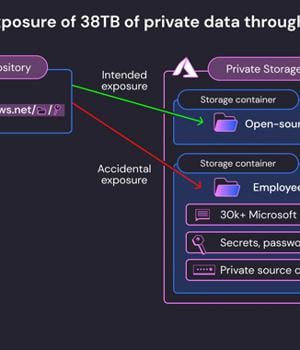

Microsoft on Monday said it took steps to correct a glaring security gaffe that led to the exposure of 38 terabytes of private data.

"The exposure came as the result of an overly permissive SAS token - an Azure feature that allows users to share data in a manner that is both hard to track and hard to revoke," Wiz said in a report.

Specifically, the README.md file of a GitHub repository published by Microsoft's AI researchers instructed developers to download the models from an Azure Storage URL. That URL also granted access to the entire storage account, exposing additional private data.

In response to the findings, Microsoft said its investigation found no evidence of unauthorized exposure of customer data and that "No other internal services were put at risk because of this issue." It also emphasized that customers need not take any action on their part.

"Due to the lack of security and governance over Account SAS tokens, they should be considered as sensitive as the account key itself," the researchers said.
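To make the researchers' warning concrete, here is a minimal sketch of how a team might check a SAS URL before publishing it. It relies only on the documented SAS query parameters (`ss` and `srt` for account-level scope, `sp` for permissions, `se` for expiry). The function name `audit_sas_url`, the seven-day threshold, and both example URLs are assumptions made for illustration; they are not taken from the Wiz report or from Microsoft's tooling.

```python
from datetime import datetime, timezone
from urllib.parse import urlparse, parse_qs

# Permission letters that allow changing data: write, delete, add, create.
WRITE_LIKE = set("wdac")


def audit_sas_url(url, now=None, max_days=7):
    """Return a list of warnings for a SAS URL (hypothetical helper)."""
    now = now or datetime.now(timezone.utc)
    # Keep the first value of each query parameter.
    params = {k: v[0] for k, v in parse_qs(urlparse(url).query).items()}
    warnings = []

    # Account SAS tokens carry both 'ss' (services) and 'srt' (resource types).
    if "ss" in params and "srt" in params:
        warnings.append("account-level SAS, not scoped to one container or blob")

    # Flag any permission beyond read/list.
    risky = sorted(WRITE_LIKE & set(params.get("sp", "")))
    if risky:
        warnings.append("grants more than read access: " + "".join(risky))

    # Flag long-lived tokens; 'se' is an ISO 8601 UTC timestamp.
    expiry = params.get("se")
    if expiry:
        expires = datetime.fromisoformat(expiry.replace("Z", "+00:00"))
        if expires.tzinfo is None:
            expires = expires.replace(tzinfo=timezone.utc)
        if (expires - now).days > max_days:
            warnings.append("expires more than %d days from now" % max_days)

    return warnings


if __name__ == "__main__":
    # Made-up URL for illustration only; the signature is a placeholder.
    example = (
        "https://example.blob.core.windows.net/models"
        "?sv=2021-08-06&ss=b&srt=sco&sp=rwdlac"
        "&se=2051-10-06T07:09:59Z&sig=REDACTED"
    )
    for warning in audit_sas_url(example):
        print("WARNING:", warning)
```

A check like this only inspects the token's declared scope. It cannot tell who has already used the token, which is part of why the researchers describe SAS sharing as hard to track and hard to revoke.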

"AI unlocks huge potential for tech companies. However, as data scientists and engineers race to bring new AI solutions to production, the massive amounts of data they handle require additional security checks and safeguards," Wiz CTO and co-founder Ami Luttwak said in a statement.

News URL

https://thehackernews.com/2023/09/microsoft-ai-researchers-accidentally.html
