LLM Guard: Open-source toolkit for securing Large Language Models

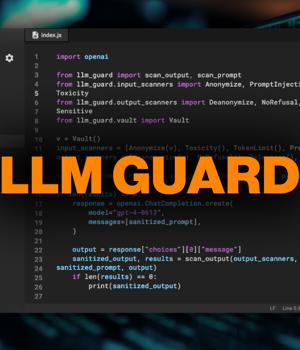

LLM Guard is a toolkit designed to fortify the security of Large Language Models.

It provides extensive evaluators for both inputs and outputs of LLMs, offering sanitization, detection of harmful language and data leakage, and prevention against prompt injection and jailbreak attacks.
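To make the idea of input and output evaluators concrete, here is a minimal sketch in plain Python. It does not use LLM Guard's actual API: the `Scanner` type, the two example scanners, and `run_scanners` are hypothetical names chosen for illustration, and the keyword check is a crude stand-in for the trained detectors a real toolkit would use. It only shows the general pattern of chaining scanners that either sanitize text or flag it as invalid.

```python
import re
from typing import Callable, List, Tuple

# A scanner takes text and returns (possibly sanitized text, is_valid flag).
# All names here are hypothetical; this is not LLM Guard's actual API.
Scanner = Callable[[str], Tuple[str, bool]]

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
INJECTION_HINTS = ("ignore previous instructions", "disregard the above")


def redact_emails(text: str) -> Tuple[str, bool]:
    """Sanitize: replace e-mail addresses with a placeholder; never rejects."""
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text), True


def flag_prompt_injection(text: str) -> Tuple[str, bool]:
    """Detect: naive keyword check standing in for a real classifier."""
    lowered = text.lower()
    return text, not any(hint in lowered for hint in INJECTION_HINTS)


def run_scanners(text: str, scanners: List[Scanner]) -> Tuple[str, bool]:
    """Apply scanners in order; the text is valid only if every scanner passes."""
    all_valid = True
    for scanner in scanners:
        text, valid = scanner(text)
        all_valid = all_valid and valid
    return text, all_valid
```

The same `run_scanners` helper could be applied once to the user's prompt before it reaches the model and once to the model's response before it reaches the user, with a different scanner list for each direction.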

LLM Guard was developed for a straightforward purpose: Despite the potential for LLMs to enhance employee productivity, corporate adoption has been hesitant.

"We want this to become the market's preferred open-source security toolkit, simplifying the secure adoption of LLMs for companies by offering all essential tools right out of the box," Oleksandr Yaremchuk, one of the creators of LLM Guard, told Help Net Security.

"LLM Guard has undergone some exciting updates, which we are rolling out soon, including better documentation for the community, support for GPU inference, and our recently deployed LLM Guard Playground on HuggingFace. Over the coming month, we will release our security API, focusing on ensuring performance with low latency and strengthening the output evaluation/hallucination," Yaremchuk added.

Whether you use ChatGPT, Claude, Bard, or any other foundation model, you can now fortify your LLM.

News URL

https://www.helpnetsecurity.com/2023/09/19/llm-guard-open-source-securing-large-language-models/